Task Specification with LLMs

Talking to Robots Course

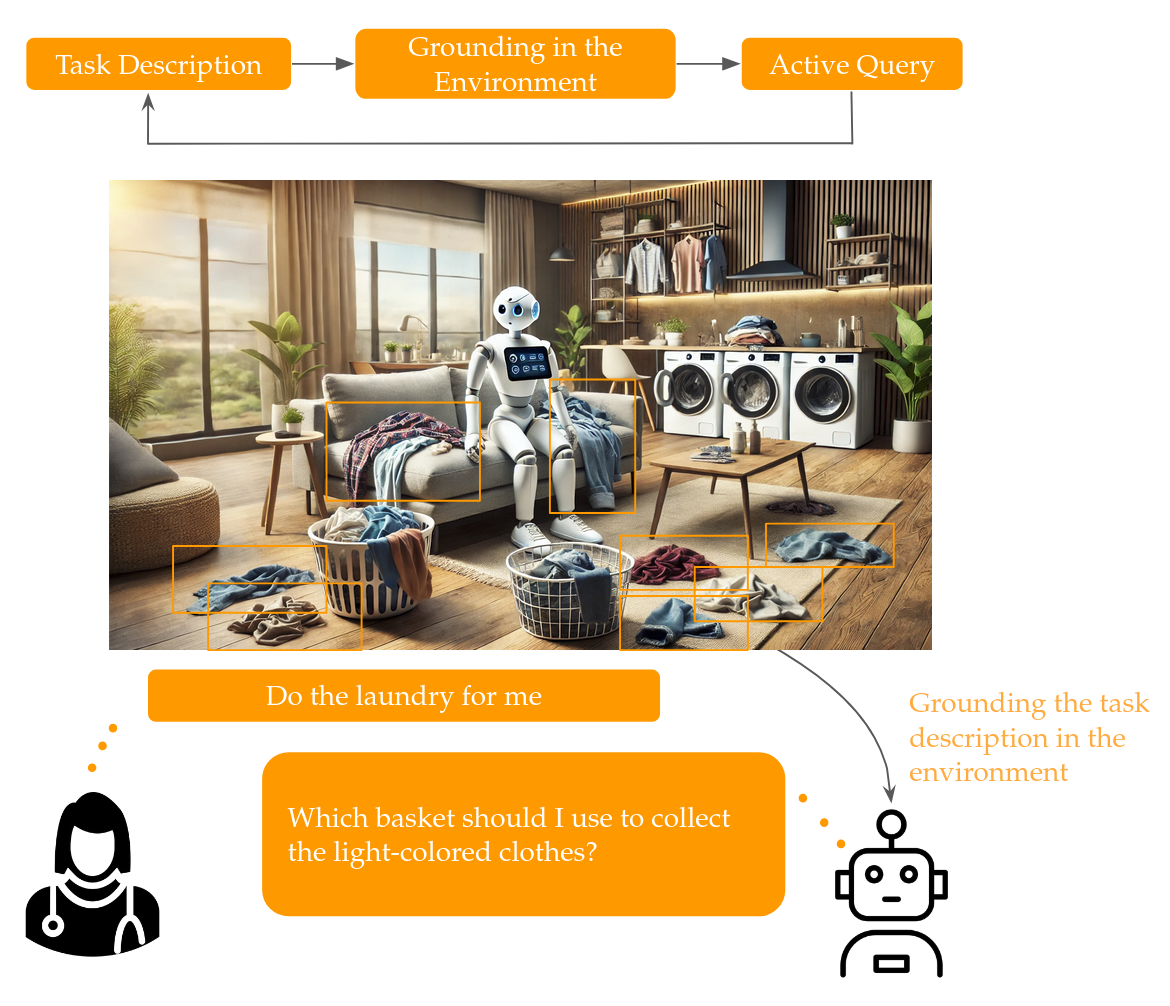

For the Talking to Robots course I took in Fall 2024, our project focused on Uncertainty Reduction for Task Specification with Active Querying. The idea is to build an LLM agent that, given a high-level instruction such as “clean the table”, asks the human clarifying questions to reduce task ambiguity and then generates a plan to complete the task.
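The post does not say how the agent decides which question to ask. One standard way to formalize "reducing ambiguity" is to keep a probability distribution over candidate interpretations of the instruction and ask the question with the highest expected information gain (expected drop in Shannon entropy). The sketch below is a hypothetical illustration of that idea, not the project's method; the interpretations, probabilities, and questions are made up, and in the real system LLM agents would generate them.

```python
import math
from collections import defaultdict

# Hypothetical sketch: the post does not specify how questions are chosen.
# Here, each question is modeled as a function from an interpretation to
# the answer a human holding that interpretation would give.

def entropy(probs):
    """Shannon entropy (in bits) of an iterable of probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def expected_info_gain(hypotheses, question):
    """Expected entropy reduction from asking `question`.

    hypotheses: dict mapping interpretation -> prior probability (sums to 1).
    question: callable mapping an interpretation to its predicted answer.
    """
    prior_entropy = entropy(hypotheses.values())
    # Group probability mass by the answer each interpretation predicts.
    by_answer = defaultdict(dict)
    for h, p in hypotheses.items():
        by_answer[question(h)][h] = p
    # Average the posterior entropy over answers, weighted by answer probability.
    expected_posterior = 0.0
    for group in by_answer.values():
        mass = sum(group.values())
        expected_posterior += mass * entropy(p / mass for p in group.values())
    return prior_entropy - expected_posterior

def best_question(hypotheses, questions):
    """Return the question text whose answer is expected to remove the most uncertainty."""
    return max(questions, key=lambda q: expected_info_gain(hypotheses, questions[q]))
```

For instance, with three made-up readings of “clean the table” weighted 0.4/0.3/0.3, asking “Should I move any objects?” splits the mass 0.4 vs 0.6 and gains about 0.97 bits, beating a question that isolates only the 0.3 “throw away trash” reading (about 0.88 bits).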

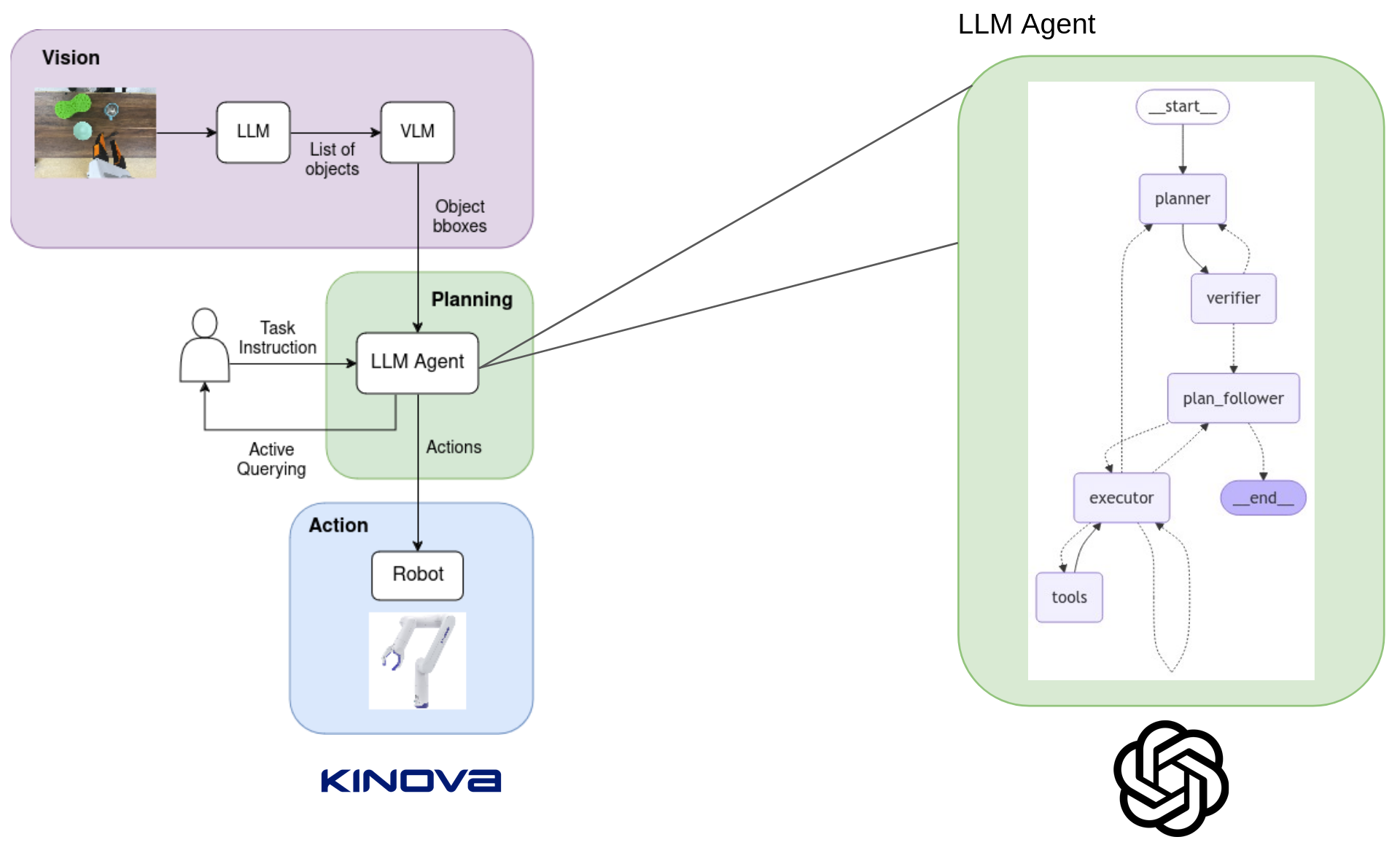

In this project we use current state-of-the-art LLMs and VLMs, and we deploy the system on a Kinova robot arm. Our pipeline consists of three modules:

- A vision module that does open-vocabulary detection of objects from images captured by an overhead camera.

- A planning and querying module that consists of three LLM agents collaborating to reduce planning uncertainty.

- A physical robot platform with a low-level action API.
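The post does not describe the interfaces between these modules. The sketch below is a hypothetical illustration of how the three could be chained; the function names, the dict/tuple data formats, and the `ask_human` callback are all assumptions, and the three-agent planner is collapsed into a single `plan` callable.

```python
from typing import Callable

# Hypothetical data formats, chosen only for this sketch:
Detection = dict[str, str]   # e.g. {"label": "cup", "color": "red"}
Action = tuple[str, str]     # e.g. ("pick", "cup") or ("place", "red_region")

def run_pipeline(
    instruction: str,
    detect: Callable[[], list[Detection]],
    plan: Callable[[str, list[Detection], Callable[[str], str]], list[Action]],
    ask_human: Callable[[str], str],
    execute: Callable[[Action], None],
) -> list[Action]:
    """Chain the three modules: perceive the table, clarify and plan, then act."""
    # Vision module: detect objects in the overhead camera image.
    scene = detect()
    # Planning/querying module: may call ask_human to resolve ambiguity.
    actions = plan(instruction, scene, ask_human)
    # Robot platform: send each primitive to the low-level action API.
    for action in actions:
        execute(action)
    return actions
```

Passing each module in as a function lets the orchestration be exercised with stub detectors, planners, and executors before anything runs on the real arm.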

Example 1: Sort the objects on the table

A sample task completion is shown below for the initial instruction “Sort the objects”. Through querying, the agent determines that the user wants the objects sorted by color, then carries out a plan with reduced ambiguity.
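The post does not show how the resolved preference becomes a concrete plan. As a hypothetical sketch, assuming the vision module reports a color attribute for each object and the robot places objects into one region per color (both assumptions; the `_region` names are made up), grouping by the clarified attribute could look like this:

```python
from collections import defaultdict

def sort_plan(objects, attribute):
    """Build pick-and-place steps that group objects by one attribute.

    objects: list of dicts, e.g. {"label": "cup", "color": "red"}
             (hypothetical format for the vision module's output).
    attribute: the sorting key resolved through querying, e.g. "color".
    """
    groups = defaultdict(list)
    for obj in objects:
        groups[obj[attribute]].append(obj["label"])
    steps = []
    # Visit groups in sorted order so the plan is deterministic.
    for value in sorted(groups):
        for label in groups[value]:
            steps.append(("pick", label))
            steps.append(("place", f"{value}_region"))  # hypothetical target region name
    return steps
```

Because the attribute is a parameter, the same routine would cover a different answer to the clarifying question (for example, sorting by object type) without changing the planning code.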